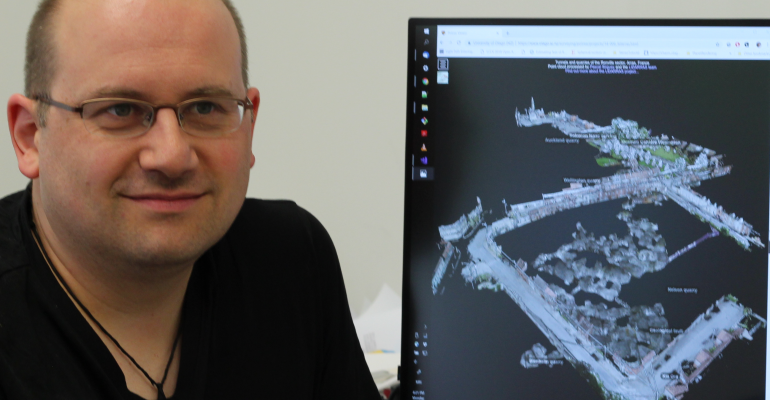

Dr Steve Mills

Helping surgeons see inside brains

It’s dark inside your brain, and if you shone a torch in there, you’d find the tissues soft, slippery and shiny.

Those are complicating factors when you’re trying to create a 3D computer model using cameras, because when both the light and the camera are moving at the same time, untangling the two is very difficult.

If you’re a brain surgeon, being able to see an on-screen 3D model of the structures inside someone’s skull could be very helpful – giving you context about what’s happening around and behind your endoscope’s camera as you move it. So far, though, science hasn’t been able to deliver one. The moving light problem is so complicated that current software can only build 3D models of things that are static.

SfTI seed project researcher and Senior Lecturer at the University of Otago Dr Steven Mills says there’s also a big leap between 3D modelling of something that looks like it’s real, and 3D modelling of actual reality.

“The disadvantage of simulation is that it’s doomed to success. You can get rid of all the problems of the real world like shadows and shine.

“But if you’re inside someone’s brain, you need to know exactly where the structures are – not roughly where they are. Brain surgery’s quite tricky.”

Steven and his collaborator Dr Pascal Sirguey at Otago’s School of Surveying are working on this problem, which was triggered by a request from an actual New Zealand brain surgeon. If successful, it could lead to accurate 3D models with far-reaching applications, from surgery to underwater robotic examination of shipwrecks or oil platforms – essentially anywhere that’s too dark to see.

At the centre of the work is Steven’s realisation that in theory, light moving as you move is actually a good thing for 3D modelling.

“That’s really what 3D is. You’re seeing light differently as you move to perceive depth.

“In the past, being able to reproduce the way light moves with the eye has been seen as the problem, but we think it should be an advantage. And until you’ve got the right problem, you don’t know what questions to ask.”

Steven found the work needed to start with a relatively simple surface and enlisted Pascal’s help.

The uniform surface of tunnels simplifies the problem down to its core

As part of the WWI centenary commemorations, Pascal was allowed into the Arras tunnels in France, which were extended by more than 4000 metres in 1916, largely by New Zealanders working around the clock over five months. Pascal’s team photographed the tunnel surfaces with a flash from many vantage points, capturing how the light changed as the camera moved closer to and further from the walls along the length of the tunnel section.

“The surface of the tunnels is quite uniform, so it simplifies the modelling problem down to its core,” Steven says.

As well as the tunnel data sets, the team are working with more mundane scenes, such as the corners where their office walls meet, which offer simple surfaces without shadows or reflections.

The team’s computer modelling approach works in three stages. It starts by building a good sparse model of the environment, then works toward a better-defined one with thousands of points correctly placed in space. The final stage is a full 3D model displaying millions of accurately placed dots of light, which give the scene surfaces that the eye can interpret as the real thing.

“We’re trying to build a unique algorithm or recipe that works when you’ve got the moving light problem. Then we’ll want to know how efficient it is, how accurate, how long it takes, and what the limits are,” Steven says.

“Because reality is always inconvenient.”